YOLO is an extremely fast object detection algorithm proposed in 2015. For more detail on how it works, see my YOLOv1 paper review.

In this post, we will implement the full YOLOv1 with PyTorch.

References

The YOLOv1 video by Aladdin Persson was super helpful and I learned a lot from it. My train.py is mostly the same as his code. However, I wanted the code to be easier to understand and more intuitive, so I implemented everything except train.py and model.py mostly from scratch. You can follow along with the comments.

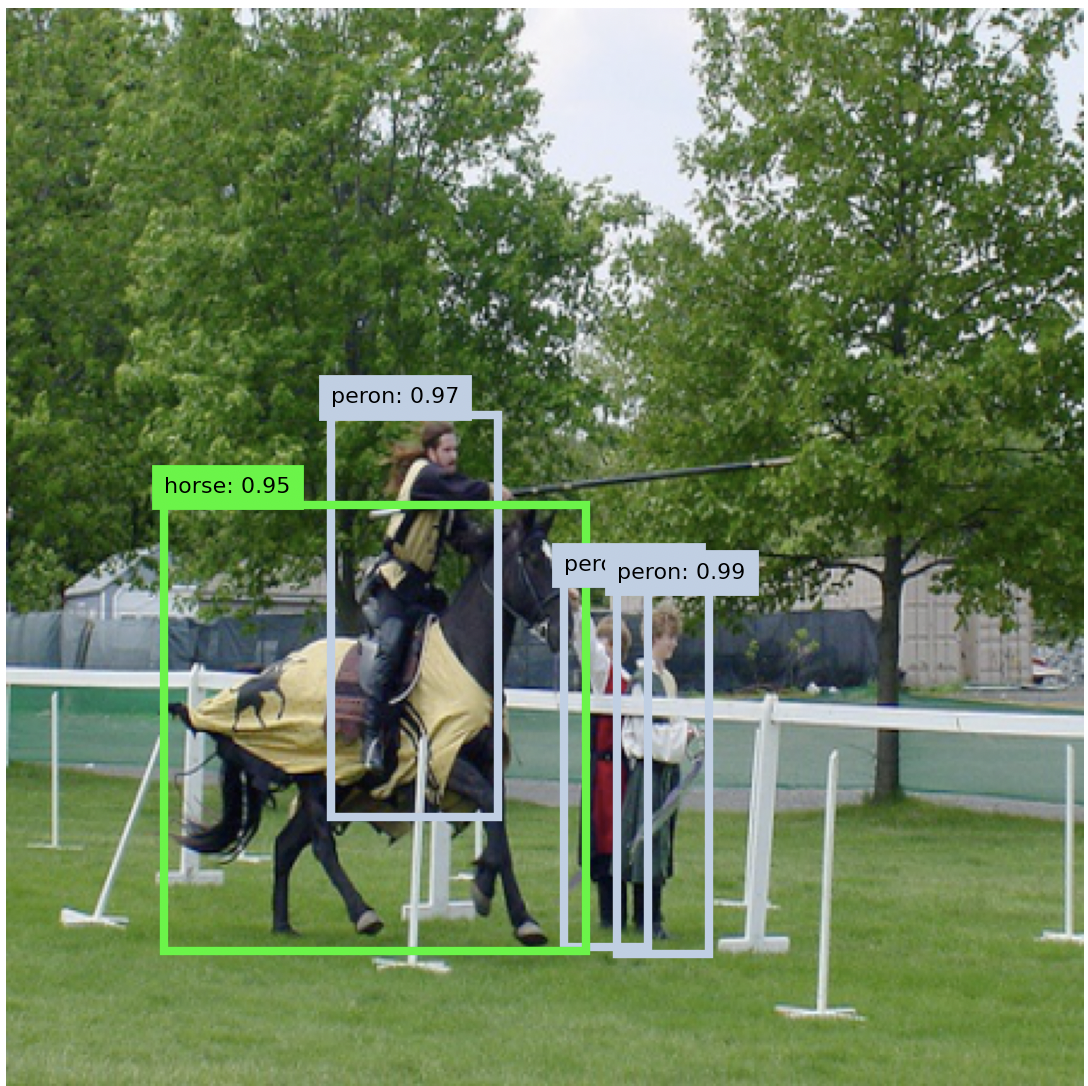

The model was overfitted for 8examples.csv to see if it works. Below is one predicted bounding box example of the model.

Module Structure

The project consists of six modules: dataset.py, loss.py, utils.py, model.py, train.py, and plot_image.py.

dataset.py

You can download the dataset here: Link. Note that the labels are in the format (class label, x, y, w, h), with coordinates relative to the whole image. Therefore, we need to convert them to be relative to each grid cell.
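To make the conversion concrete, here is a minimal pure-Python sketch of the same arithmetic that dataset.py applies to each box. The function name `to_cell_relative` is hypothetical and only used for illustration; it is not part of the modules in this post.

```python
import math

def to_cell_relative(x, y, w, h, S=7):
    """Convert an image-relative midpoint box to cell-relative form.

    Returns (row, col, cell_x, cell_y, cell_w, cell_h).
    """
    col = math.floor(x * S)   # column index j of the cell containing the centre
    row = math.floor(y * S)   # row index i of the cell containing the centre
    cell_x = S * x - col      # horizontal offset inside that cell
    cell_y = S * y - row      # vertical offset inside that cell
    cell_w = S * w            # width in units of one cell (can exceed 1)
    cell_h = S * h            # height in units of one cell (can exceed 1)
    return row, col, cell_x, cell_y, cell_w, cell_h

# A box centred at (0.5, 0.25) lands in row 1, column 3 of a 7x7 grid.
print(to_cell_relative(0.5, 0.25, 0.2, 0.4))
```

Note that a centre lying exactly on the right or bottom edge (x or y equal to 1.0) would produce index S, which is out of range, so real labels may need clamping.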


import torch
from torch.utils.data import Dataset
import pandas as pd
from PIL import Image
import os

class VOCDataset(Dataset):
    def __init__(self, data_csv, img_dir, label_dir, S=7, B=2, C=20, transform=None):
        '''
        Parameters:
            data_csv (str): csv file path name which has two columns.
                1st column: img file name
                2nd column: label file name
            img_dir (str): image directory path
            label_dir (str): label directory path
            S (int): Grid size (ex. 7 for a 7x7 grid)
            B (int): Number of bounding boxes per one cell
            C (int): Number of classes
            transform (Compose): A list of transforms
        '''
        self.df = pd.read_csv(data_csv)
        self.img_dir = img_dir
        self.label_dir = label_dir
        self.S = S
        self.B = B
        self.C = C
        self.transform = transform

    def __len__(self):
        '''
        Returns the length of the dataset
        '''
        return len(self.df)

    def __getitem__(self, idx):
        '''
        Returns one data item with idx

        Parameters:
            idx (int): Index number of data
        Returns:
            tuple: (image, label) where label is in format of
                (S, S, 30). Note that last 5 elements of label
                will NOT be used.
        '''
        # Path for image and label
        img_path = os.path.join(self.img_dir, self.df.iloc[idx, 0])
        label_path = os.path.join(self.label_dir, self.df.iloc[idx, 1])

        # Read image
        image = Image.open(img_path)

        # Read bounding boxes from label .txt file and store them to list
        bboxes = []
        with open(label_path, 'r') as f:
            while True:
                line = f.readline()
                if not line:
                    break
                # class_num, x, y, w, h
                one_box = line.replace('\n','').split(' ')
                # convert types to int or float accordingly
                # (int(float(el)) also accepts values written like "11.0")
                one_box = [
                    float(el) if float(el) != int(float(el)) else int(float(el))
                    for el in one_box
                ]
                bboxes.append(one_box)

        bboxes = torch.tensor(bboxes)
        if self.transform:
            image, bboxes = self.transform(image, bboxes)
        bboxes = bboxes.tolist()

        '''
        Loop through each box and scale relative to grid cell.
        Formula is quite straightforward.
            cell_w = S * w
            cell_h = S * h
            cell_x = S * x - floor(x * S)
            cell_y = S * y - floor(y * S)
        '''
        label_matrix = torch.zeros((self.S, self.S, 30)) # last 5 will NOT be used!
        for box in bboxes:
            # (i,j) in SxS grid -> used to assign box to label_matrix
            j, i = int(box[1] * self.S), int(box[2] * self.S)
            box_class = int(box[0])

            # Rescale relative to cell
            box[1] = self.S * box[1] - j # x
            box[2] = self.S * box[2] - i # y
            box[3] = self.S * box[3] # w
            box[4] = self.S * box[4] # h

            # Only the first box assigned to a cell is kept
            if label_matrix[i,j,20] == 0:
                # one-hot vector for class
                label_matrix[i, j, box_class] = 1
                # confidence for ground-truth = 1
                label_matrix[i, j, 20] = 1
                # box coordinate
                label_matrix[i, j, 21:25] = torch.tensor(box[1:])

        return image, label_matrix

model.py

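You can refer to the paper for the architecture. In architecture_config, each tuple is (kernel_size, out_channels, stride, padding), "M" is a 2x2 max-pool with stride 2, and a list repeats its two convolutions the given number of times.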


import torch
import torch.nn as nn
import utils

# Import Network Architecture
# net_architecture = utils.read_json('./config.json')

# tuple: (kernel_size, out_channels, stride, padding)
# "M":   2x2 max-pool with stride 2
# list:  [conv tuple, conv tuple, number of repeats]
architecture_config = [
    (7, 64, 2, 3),
    "M",
    (3, 192, 1, 1),
    "M",
    (1, 128, 1, 0),
    (3, 256, 1, 1),
    (1, 256, 1, 0),
    (3, 512, 1, 1),
    "M",
    [(1, 256, 1, 0), (3, 512, 1, 1), 4],
    (1, 512, 1, 0),
    (3, 1024, 1, 1),
    "M",
    [(1, 512, 1, 0), (3, 1024, 1, 1), 2],
    (3, 1024, 1, 1),
    (3, 1024, 2, 1),
    (3, 1024, 1, 1),
    (3, 1024, 1, 1),
]

class CNNBlock(nn.Module):
    def __init__(self, in_channels, out_channels, **kwargs):
        super(CNNBlock, self).__init__()
        self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
        self.batchnorm = nn.BatchNorm2d(out_channels)
        self.leakyrelu = nn.LeakyReLU(0.1)

    def forward(self, x):
        return self.leakyrelu(self.batchnorm(self.conv(x)))

class YOLOv1(nn.Module):
    def __init__(self, in_channels=3, **kwargs):
        super(YOLOv1, self).__init__()
        self.architecture = architecture_config
        self.in_channels = in_channels
        self.darknet = self._create_conv_layers(self.architecture)
        self.classifiers = self._create_classifiers(**kwargs)

    def forward(self, x):
        x = self.darknet(x)
        return self.classifiers(torch.flatten(x, start_dim=1))

    def _create_conv_layers(self, architecture):
        layers = []
        in_channels = self.in_channels

        for x in architecture:
            if isinstance(x, str):
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            elif isinstance(x, tuple):
                layers += [CNNBlock(
                    in_channels, x[1], kernel_size=x[0], stride=x[2], padding=x[3]
                )]
                in_channels = x[1]
            elif isinstance(x, list):
                conv1 = x[0] # tuple
                conv2 = x[1] # tuple
                repeat = x[2] # integer

                for _ in range(repeat):
                    layers += [
                        CNNBlock(
                            in_channels, conv1[1], kernel_size=conv1[0], stride=conv1[2], padding=conv1[3]
                        )
                    ]
                    layers += [
                        CNNBlock(
                            conv1[1], conv2[1], kernel_size=conv2[0], stride=conv2[2], padding=conv2[3]
                        )
                    ]
                    in_channels = conv2[1]

        return nn.Sequential(*layers)

    def _create_classifiers(self, grid_size, num_boxes, num_classes):
        S, B, C = grid_size, num_boxes, num_classes
        return nn.Sequential(
            nn.Flatten(), # no-op here: forward() already flattens the input
            nn.Linear(1024 * S * S, 496), # the paper uses 4096 hidden units
            nn.Dropout(0.0),
            nn.LeakyReLU(0.1),
            nn.Linear(496, S * S * (C + B*5)) # reshaped later to (S, S, 30)
        )
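As a sanity check on why the first linear layer expects 1024 * S * S inputs, the following pure-Python sketch traces the spatial size of a 448x448 input through architecture_config using the standard convolution output-size formula. The helper names `conv_out` and `trace_shape` are hypothetical and exist only for this illustration; the config list is copied from model.py.

```python
ARCHITECTURE = [
    (7, 64, 2, 3), "M",
    (3, 192, 1, 1), "M",
    (1, 128, 1, 0), (3, 256, 1, 1), (1, 256, 1, 0), (3, 512, 1, 1), "M",
    [(1, 256, 1, 0), (3, 512, 1, 1), 4],
    (1, 512, 1, 0), (3, 1024, 1, 1), "M",
    [(1, 512, 1, 0), (3, 1024, 1, 1), 2],
    (3, 1024, 1, 1), (3, 1024, 2, 1), (3, 1024, 1, 1), (3, 1024, 1, 1),
]

def conv_out(size, kernel, stride, padding):
    """Output size of a conv or pooling layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_shape(architecture, size=448, channels=3):
    """Return (channels, spatial_size) after the whole convolutional stack."""
    for layer in architecture:
        if layer == "M":                      # 2x2 max-pool, stride 2
            size = conv_out(size, 2, 2, 0)
        elif isinstance(layer, tuple):        # single convolution
            k, c, s, p = layer
            size, channels = conv_out(size, k, s, p), c
        else:                                 # [conv1, conv2, repeat]
            conv1, conv2, repeat = layer
            for _ in range(repeat):
                for k, c, s, p in (conv1, conv2):
                    size, channels = conv_out(size, k, s, p), c
    return channels, size

print(trace_shape(ARCHITECTURE))  # (1024, 7)
```

The result, 1024 channels on a 7x7 map, matches the grid size S = 7 used throughout the post.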

loss.py


import torch
import torch.nn as nn
from utils import intersection_over_union

class YoloLoss(nn.Module):
    def __init__(self, S=7, B=2, C=20, box_format="midpoint"):
        super(YoloLoss, self).__init__()
        self.S = S
        self.B = B
        self.C = C
        self.box_format = box_format
        self.lambda_coord = 5
        self.lambda_noobj = 0.5
        self.mse = nn.MSELoss(reduction="sum") # according to the paper

    def forward(self, preds, labels):
        '''
        Returns the loss of YOLOv1

        Parameters:
            preds (tensor): predicted bounding boxes in the shape
                (batch_size, S, S, 30)
            labels (tensor): ground truth bounding boxes in the shape
                (batch_size, S, S, 30)
        Returns:
            loss (tensor): scalar. The final loss consists of
                1. Coordinate Loss
                2. Confidence Loss(obj/noobj)
                3. Class Loss

        Layout of the last dimension:
            0:20 - class label one-hot vector
            20 - box1 confidence
            21:25 - box1 (x,y,w,h)
            25 - box2 confidence
            26:30 - box2 (x,y,w,h)
        '''

        '''
        First, we need to determine which box is responsible for detecting the
        obj in a specific grid cell, given that an object exists. As stated in
        the original paper, only ONE predicted bounding box should be responsible.
        This is also a limitation of YOLOv1. The way to determine the responsibility
        is to compare both predictions' IoU with the ground truth box and pick
        the one with the highest IoU, given an object exists.
        '''
        preds = preds.reshape(-1, self.S, self.S, self.C + 5 * self.B)

        # Ground truth coordinates, class one-hot vector, confidence
        gt_coord = labels[..., 21:25]
        gt_class = labels[..., 0:20]
        gt_confidence = labels[..., 20:21]

        # Same as confidence..but denote Identity
        Iobj = labels[..., 20:21]

        # COORDINATES for box 1, 2
        box1_coord = preds[..., 21:25]
        box2_coord = preds[..., 26:30]

        # CLASS LABEL one-hot vector
        pred_class = preds[..., 0:20]

        # CONFIDENCE for box 1,2
        box1_confidence = preds[..., 20:21]
        box2_confidence = preds[..., 25:26]

        # IoU score for box 1,2
        box1_iou = intersection_over_union(
            box1_coord,
            gt_coord,
            box_format=self.box_format
        )
        box2_iou = intersection_over_union(
            box2_coord,
            gt_coord,
            box_format=self.box_format
        )
        iou_combined = torch.cat(
            (box1_iou, box2_iou),
            dim = -1
        )

        # select best box with higher IoU
        # (batch_size, S, S, 1) -> 0 or 1
        best_box_num = iou_combined.argmax(
            dim = -1, keepdim=True
        )

        # BEST box confidence
        best_box_confidence = (
            (1 - best_box_num) * box1_confidence
            + best_box_num * box2_confidence
        )

        # BEST box coordinates (x,y,w,h)
        # (batch size, S, S, 4)
        best_box_coord = (
            (1 - best_box_num) * box1_coord # if 0
            + best_box_num * box2_coord # if 1
        )

        ##############################
        #      COORDINATE LOSS       #
        ##############################
        # torch.autograd.set_detect_anomaly(True)  # debugging aid only; slows training

        # Take sqrt of w, h. New tensors are built instead of assigning in place,
        # so neither the autograd graph nor the caller's labels are modified.
        # The sign is kept because predicted w, h can be negative; the epsilon
        # keeps the gradient of sqrt finite at 0.
        pred_wh = best_box_coord[..., 2:4]
        pred_wh = torch.sign(pred_wh) * torch.sqrt(torch.abs(pred_wh) + 1e-6)
        best_box_coord = torch.cat((best_box_coord[..., 0:2], pred_wh), dim=-1)
        gt_coord = torch.cat(
            (gt_coord[..., 0:2], torch.sqrt(gt_coord[..., 2:4])), dim=-1
        )

        coord_loss = self.lambda_coord * self.mse(
            torch.flatten(Iobj * best_box_coord, end_dim=-2),
            torch.flatten(Iobj * gt_coord, end_dim=-2)
        )

        ##############################
        #      CONFIDENCE LOSS       #
        ##############################
        # If YES object
        obj_confidence_loss = self.mse(
            torch.flatten(Iobj * best_box_confidence, end_dim=-2),
            torch.flatten(Iobj * gt_confidence, end_dim=-2)
        )

        # If NO object
        noobj_confidence_loss = self.mse(
            torch.flatten((1 - Iobj) * box1_confidence, end_dim=-2),
            torch.flatten((1 - Iobj) * gt_confidence, end_dim=-2)
        )
        noobj_confidence_loss += self.mse(
            torch.flatten((1 - Iobj) * box2_confidence, end_dim=-2),
            torch.flatten((1 - Iobj) * gt_confidence, end_dim=-2)
        )

        confidence_loss = (
            obj_confidence_loss
            + self.lambda_noobj * noobj_confidence_loss
        )

        ##############################
        #         CLASS LOSS         #
        ##############################
        class_loss = self.mse(
            torch.flatten(Iobj * pred_class, end_dim=-2),
            torch.flatten(Iobj * gt_class, end_dim=-2)
        )

        return coord_loss + confidence_loss + class_loss
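The coordinate loss compares the square roots of w and h rather than the raw values. A small numeric sketch shows the effect: the same absolute error costs more on a small box than on a large one. The helper `wh_error` is a hypothetical name used only here, and the widths are made-up illustrative values.

```python
import math

def wh_error(pred, target):
    """Squared error between square roots, as used for w and h in the loss."""
    return (math.sqrt(pred) - math.sqrt(target)) ** 2

small = wh_error(0.3, 0.2)   # small box, width off by 0.1
large = wh_error(2.1, 2.0)   # large box, width off by the same 0.1

# With a plain squared error both cases would cost about 0.01.
print(f"small box: {small:.5f}, large box: {large:.5f}")
```

Here the small-box error is roughly eight times larger than the large-box error, which is the behaviour the square root is meant to produce.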

utils.py

I personally think that utils.py was the hardest to implement. This module includes non-max suppression, IoU, mean average precision (mAP), and the conversion of model output to a list of bounding boxes.
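Since IoU underlies both non-max suppression and mAP, it may help to see it for a single pair of boxes first. The sketch below is a plain-Python version of the midpoint-format computation, without tensors; `iou_midpoint` is a hypothetical name, not a function from utils.py.

```python
def iou_midpoint(box_a, box_b):
    """IoU of two boxes given as (x, y, w, h), with (x, y) the centre."""
    # Convert centre/size to corner coordinates
    ax1, ay1 = box_a[0] - box_a[2] / 2, box_a[1] - box_a[3] / 2
    ax2, ay2 = box_a[0] + box_a[2] / 2, box_a[1] + box_a[3] / 2
    bx1, by1 = box_b[0] - box_b[2] / 2, box_b[1] - box_b[3] / 2
    bx2, by2 = box_b[0] + box_b[2] / 2, box_b[1] + box_b[3] / 2

    # Clamp at 0 so that non-overlapping boxes give IoU = 0
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - intersection
    return intersection / (union + 1e-6)   # epsilon avoids division by zero

# Two 0.4 x 0.4 boxes shifted by 0.1 horizontally: IoU is about 0.6
print(iou_midpoint((0.5, 0.5, 0.4, 0.4), (0.6, 0.5, 0.4, 0.4)))
```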


import torch
from collections import Counter
from dataset import VOCDataset
from torch.utils.data import DataLoader
from torchvision import transforms
import matplotlib.pyplot as plt
import matplotlib.patches as patches
import numpy as np

def intersection_over_union(pred_bboxes, target_bboxes,
                            box_format="midpoint"):
    '''
    Compute Intersection over Union

    Parameters:
        pred_bboxes (tensor): Predicted bounding boxes (BATCH_SIZE, 4)
        target_bboxes (tensor): Target bounding boxes (BATCH_SIZE, 4)
        box_format (str): corners or midpoint
            corners: [x1,y1,x2,y2]
            midpoint: [x,y,w,h]
    Return:
        IoU (tensor): one value per example, shape (BATCH_SIZE, 1)
    '''
    if not torch.is_tensor(pred_bboxes):
        pred_bboxes = torch.tensor(pred_bboxes)
    if not torch.is_tensor(target_bboxes):
        target_bboxes = torch.tensor(target_bboxes)

    if box_format == "midpoint":
        box1_x1 = pred_bboxes[..., 0:1] - pred_bboxes[..., 2:3] / 2
        box1_y1 = pred_bboxes[..., 1:2] - pred_bboxes[..., 3:4] / 2
        box1_x2 = pred_bboxes[..., 0:1] + pred_bboxes[..., 2:3] / 2
        box1_y2 = pred_bboxes[..., 1:2] + pred_bboxes[..., 3:4] / 2
        box2_x1 = target_bboxes[..., 0:1] - target_bboxes[..., 2:3] / 2
        box2_y1 = target_bboxes[..., 1:2] - target_bboxes[..., 3:4] / 2
        box2_x2 = target_bboxes[..., 0:1] + target_bboxes[..., 2:3] / 2
        box2_y2 = target_bboxes[..., 1:2] + target_bboxes[..., 3:4] / 2
    elif box_format == "corners":
        box1_x1 = pred_bboxes[..., 0:1]
        box1_y1 = pred_bboxes[..., 1:2]
        box1_x2 = pred_bboxes[..., 2:3]
        box1_y2 = pred_bboxes[..., 3:4]
        box2_x1 = target_bboxes[..., 0:1]
        box2_y1 = target_bboxes[..., 1:2]
        box2_x2 = target_bboxes[..., 2:3]
        box2_y2 = target_bboxes[..., 3:4]
    else:
        raise ValueError(f"Unknown box_format: {box_format}")

    cross_x1 = torch.max(box1_x1, box2_x1)
    cross_y1 = torch.max(box1_y1, box2_y1)
    cross_x2 = torch.min(box1_x2, box2_x2)
    cross_y2 = torch.min(box1_y2, box2_y2)

    # For non-overlapping boxes, clamp to 0 so that IoU=0
    intersection = (cross_x2 - cross_x1).clamp(0) * (cross_y2 - cross_y1).clamp(0)
    union = (
        (box1_x2 - box1_x1) * (box1_y2 - box1_y1)
        + (box2_x2 - box2_x1) * (box2_y2 - box2_y1)
        - intersection
    )

    return intersection / (union + 1e-6)

def non_max_suppression(
    bboxes, iou_threshold, confidence_threshold, box_format="midpoint"
):
    '''
    Performs Non Max Suppression on the given list of bounding boxes

    Parameters:
        bboxes (list): list[(class_prediction, confidence, x, y, w, h)]
        iou_threshold (float): a box of the same class is suppressed when its
            IoU with a higher-confidence box is at or above this value
        confidence_threshold (float): minimum confidence required for predicted bbox.
            all bboxes below this confidence are removed prior to nms
        box_format (str): "corners" or "midpoint"
    Return:
        list: A list of bboxes after NMS performed
    '''
    nms_boxes = []
    assert type(bboxes) == list

    # [1] Remove all bboxes with confidence <= confidence_threshold
    bboxes = [box for box in bboxes if box[1] > confidence_threshold]

    # [2] Sort bboxes for confidence in descending order
    bboxes.sort(key=lambda x: x[1], reverse=True)

    # [3] Perform nms for "EACH" class
    while(bboxes):
        top_box = bboxes.pop(0)
        nms_boxes.append(top_box)

        # [3-1] Don't compare if different class
        # [3-2] Only "leave" boxes with iou < iou_threshold
        bboxes = [box for box in bboxes
                  if box[0] != top_box[0]
                  or intersection_over_union(
                      torch.tensor(box[2:]),
                      torch.tensor(top_box[2:]),
                      box_format=box_format
                  ) < iou_threshold
                 ]

    return nms_boxes
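To see the suppression logic in isolation, here is a self-contained plain-Python sketch of the same steps (filter by confidence, sort, keep the top box, drop same-class boxes that overlap it). The names `iou_midpoint` and `nms`, and the candidate boxes, are hypothetical and made up for this illustration.

```python
def iou_midpoint(a, b):
    """IoU of two (x, y, w, h) boxes, with (x, y) the centre."""
    inter_w = max(0.0, min(a[0] + a[2] / 2, b[0] + b[2] / 2)
                  - max(a[0] - a[2] / 2, b[0] - b[2] / 2))
    inter_h = max(0.0, min(a[1] + a[3] / 2, b[1] + b[3] / 2)
                  - max(a[1] - a[3] / 2, b[1] - b[3] / 2))
    inter = inter_w * inter_h
    return inter / (a[2] * a[3] + b[2] * b[3] - inter + 1e-6)

def nms(boxes, iou_threshold, confidence_threshold):
    """boxes: list of [class, confidence, x, y, w, h]."""
    boxes = sorted((b for b in boxes if b[1] > confidence_threshold),
                   key=lambda b: b[1], reverse=True)
    kept = []
    while boxes:
        top = boxes.pop(0)
        kept.append(top)
        # Keep boxes of another class, or same-class boxes with low overlap
        boxes = [b for b in boxes
                 if b[0] != top[0]
                 or iou_midpoint(b[2:], top[2:]) < iou_threshold]
    return kept

candidates = [
    [11, 0.9, 0.50, 0.50, 0.40, 0.40],  # dog, highest confidence
    [11, 0.6, 0.52, 0.50, 0.40, 0.40],  # near-duplicate of the first box
    [7, 0.8, 0.50, 0.50, 0.40, 0.40],   # cat at the same place: other class
    [11, 0.2, 0.10, 0.10, 0.10, 0.10],  # below the confidence threshold
]
for box in nms(candidates, iou_threshold=0.5, confidence_threshold=0.4):
    print(box)
```

Only the first dog box and the cat box survive: the duplicate dog box is suppressed, while the cat box is kept even though it overlaps completely, because suppression is applied per class.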

def mean_average_precision(
    pred_boxes, true_boxes, iou_threshold=0.5, box_format="midpoint",
    num_classes=20
):
    '''
    Calculates mAP for given predicted boxes and true boxes

    Parameters:
        pred_boxes (list): list of bounding boxes
            - (image idx, class, confidence, x, y, w, h)
        true_boxes (list): list of ground truth bounding boxes
        iou_threshold (float): minimum iou required for bbox to be correct
        box_format (str): "corners" or "midpoint"
        num_classes (int): number of classes
    Returns:
        mAP (float): mAP across "all" classes
    '''
    # [NOTE] The ultimate goal is to find TP & FP for each pred_boxes with class c

    # average precisions -> later will be averaged = mAP
    average_precisions = []

    for c in range(num_classes):
        # pred_boxes for current class c
        class_pred_boxes = [
            box for box in pred_boxes
            if box[1] == c
        ]
        # true_boxes for current class c
        class_gt_boxes = [
            box for box in true_boxes
            if box[1] == c
        ]

        # If there's no gt box, skip
        if len(class_gt_boxes) == 0:
            continue

        # Build a frequency dictionary for each image index
        # This tells how many true boxes per image index
        gt_visited = Counter([
            gt[0] for gt in class_gt_boxes
        ])

        # convert value: num_boxes -> [0] * num_boxes
        # to make visited array for each box
        for key, val in gt_visited.items():
            gt_visited[key] = torch.zeros(val)

        # Time to calculate TP/FP.
        # First, sort class_pred_boxes w.r.t confidence
        class_pred_boxes.sort(key=lambda x: x[2], reverse=True)
        TP = torch.zeros(len(class_pred_boxes))
        FP = torch.zeros(len(class_pred_boxes))
        total_gt_boxes = len(class_gt_boxes)

        for detection_idx, detection in enumerate(class_pred_boxes):
            best_iou = 0
            best_iou_gt_idx = None

            # GT boxes for SAME image and SAME class
            same_image_class_gt_boxes = [
                box for box in class_gt_boxes
                if box[0] == detection[0]
            ]

            for gt_idx, gt in enumerate(same_image_class_gt_boxes):
                iou = intersection_over_union(
                    torch.tensor(detection[3:]),
                    torch.tensor(gt[3:]),
                    box_format=box_format
                )
                if iou > best_iou:
                    best_iou = iou
                    best_iou_gt_idx = gt_idx

            if best_iou > iou_threshold:
                # If not visited, then the current predicted detection is correct!
                if gt_visited[detection[0]][best_iou_gt_idx] == 0:
                    gt_visited[detection[0]][best_iou_gt_idx] = 1
                    TP[detection_idx] = 1
                else: # If already visited, then the current predicted detection is incorrect!
                    FP[detection_idx] = 1
            else: # If best iou <= threshold, then the current pred detection failed!
                FP[detection_idx] = 1

        # Now, we found all TP, FP for current class
        TP_cumsum = torch.cumsum(TP, dim=0)
        FP_cumsum = torch.cumsum(FP, dim=0)

        precisions = TP_cumsum / (TP_cumsum + FP_cumsum + 1e-6)
        recalls = TP_cumsum / (total_gt_boxes + 1e-6)

        # Prepend the point (recall=0, precision=1); floats keep the dtypes consistent
        precisions = torch.cat(
            (torch.tensor([1.0]), precisions)
        )
        recalls = torch.cat(
            (torch.tensor([0.0]), recalls)
        )

        # Area under the precision-recall curve (trapezoidal rule)
        average_precisions.append(torch.trapz(precisions, recalls))

    return sum(average_precisions) / len(average_precisions)
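The last step of mean_average_precision, turning TP/FP flags into an average precision for one class, can be illustrated without tensors. The sketch below is a plain-Python version under the same convention of starting the curve at recall 0 and precision 1; `average_precision` is a hypothetical name and the flags are made-up toy data.

```python
def average_precision(is_true_positive, total_gt):
    """Area under the precision-recall curve via the trapezoidal rule.

    is_true_positive: one flag per detection, sorted by descending confidence.
    total_gt: number of ground-truth boxes of this class.
    """
    precisions, recalls = [1.0], [0.0]   # starting point of the curve
    tp = fp = 0
    for flag in is_true_positive:
        if flag:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / total_gt)

    area = 0.0
    for k in range(1, len(recalls)):
        width = recalls[k] - recalls[k - 1]
        area += width * (precisions[k] + precisions[k - 1]) / 2
    return area

# Three detections (TP, FP, TP) against two ground-truth boxes
print(average_precision([True, False, True], total_gt=2))
```

mAP is then the mean of this value over all classes that have at least one ground-truth box.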

def get_bboxes(
    loader, model,
    iou_threshold, confidence_threshold,
    box_format="midpoint",
    device="cuda",
    S=7
):
    '''
    Returns a tuple of list of all the bounding boxes information for both prediction
    boxes and ground truth boxes in shape (image idx, class, confidence, x, y, w, h)

    Parameters:
        loader (generator): DataLoader
        model (nn.Module): YOLOv1 model
        iou_threshold (float): IoU threshold used by non-max suppression
        confidence_threshold: min. confidence to be a candidate
        box_format (str): "corners" or "midpoint",
        device (str): "cpu" or "cuda"
        S (int): Grid size
    Returns:
        tuple: (all_pred_boxes, all_gt_boxes)
            - decoupled bounding box information across all batches and examples
              for predictions and ground truths
    '''
    # We're not training
    model.eval()
    train_idx = 0

    # All boxes to return: (train idx, class, confidence, x, y, w, h)
    all_pred_boxes = []
    all_gt_boxes = []

    # For each BATCH
    for batch_idx, (images, labels) in enumerate(loader):
        images = images.to(device)
        labels = labels.to(device)

        with torch.no_grad():
            predictions = model(images)

        print("prediction shape:", predictions.shape)
        # after forward(), its shape is (batch_size, S*S*(C+5*B))

        batch_size = images.shape[0]

        # predictions = (batchsize, S, S, 30)
        # each predictions[batchsize, ...] is predictions per ONE IMAGE
        # labels = (batchsize, S, S, 30)
        pred_boxes = cellboxes_to_list_boxes(predictions, S=S)
        gt_boxes = cellboxes_to_list_boxes(labels, S=S)
        # pred_boxes = [list of (class, confidence, x, y, w, h)] * batch_size
        # gt_boxes = [list of (class, confidence, x, y, w, h)] * batch_size

        # for each IMAGE
        for idx in range(batch_size):
            nms_boxes = non_max_suppression(
                pred_boxes[idx],
                iou_threshold=iou_threshold,
                confidence_threshold=confidence_threshold,
                box_format=box_format
            )
            print(nms_boxes)

            # For each PREDICTED BOX
            for nms_box in nms_boxes:
                # We need "train idx" for mAP
                all_pred_boxes.append(
                    [train_idx] + nms_box
                )

            # For each GROUND TRUTH BOX
            for gt_box in gt_boxes[idx]:
                # Many (i,j)th cells in S x S DO NOT have
                # ground truth labels!!
                if gt_box[1] > confidence_threshold:
                    all_gt_boxes.append(
                        [train_idx] + gt_box
                    )

            train_idx += 1

    model.train()
    return all_pred_boxes, all_gt_boxes

def convert_cellboxes(box_3d, S=7):
    '''
    Converts (batch_size, S, S, 30) -> (batch_size, S, S, 6)
    by selecting the best box among box 1 and 2.

    Parameters:
        box_3d (tensor): Shape of (batch_size, S, S, 30)
        S (int): Grid size
    Returns:
        tensor: Shape of (batch_size, S, S, 6) where
            tensor vector in dim=3 is in the format of
            (class, confidence, x, y, w, h)

    For 30 vector,
        0:20 = class vector
        20 = box1 confidence
        21:25 = box1 (x,y,w,h)
        25 = box2 confidence
        26:30 = box2 (x,y,w,h)
    '''
    batch_size = box_3d.shape[0]
    # Work on a detached copy so the in-place rescaling below never
    # modifies the caller's tensor (e.g. the labels of the current batch)
    box_3d = box_3d.detach().to("cpu").reshape(batch_size, S, S, 30).clone()
    converted_boxes = torch.empty(batch_size, S, S, 6)

    for i in range(S):
        for j in range(S):
            # Scale to relative to the whole image rather than cell
            box1_cell_x = box_3d[..., i:i+1, j:j+1, 21]
            box1_cell_y = box_3d[..., i:i+1, j:j+1, 22]
            box1_cell_w = box_3d[..., i:i+1, j:j+1, 23]
            box1_cell_h = box_3d[..., i:i+1, j:j+1, 24]
            box_3d[..., i:i+1, j:j+1, 21] = (j + box1_cell_x) / S
            box_3d[..., i:i+1, j:j+1, 22] = (i + box1_cell_y) / S
            box_3d[..., i:i+1, j:j+1, 23] = box1_cell_w / S
            box_3d[..., i:i+1, j:j+1, 24] = box1_cell_h / S

            box2_cell_x = box_3d[..., i:i+1, j:j+1, 26]
            box2_cell_y = box_3d[..., i:i+1, j:j+1, 27]
            box2_cell_w = box_3d[..., i:i+1, j:j+1, 28]
            box2_cell_h = box_3d[..., i:i+1, j:j+1, 29]
            box_3d[..., i:i+1, j:j+1, 26] = (j + box2_cell_x) / S
            box_3d[..., i:i+1, j:j+1, 27] = (i + box2_cell_y) / S
            box_3d[..., i:i+1, j:j+1, 28] = box2_cell_w / S
            box_3d[..., i:i+1, j:j+1, 29] = box2_cell_h / S

            # Best Class
            best_class = box_3d[..., i:i+1, j:j+1, 0:20].argmax(3, keepdim=True)

            # Best confidence
            # Best confidence idx for identity (0 -> box1, 1 -> box2)
            best_confidence, best_confidence_idx = torch.cat(
                (
                    box_3d[..., i:i+1, j:j+1, 20:21],
                    box_3d[..., i:i+1, j:j+1, 25:26]
                ),
                dim=3
            ).max(dim=3, keepdim=True)

            boxes1 = box_3d[..., i:i+1, j:j+1, 21:25]
            boxes2 = box_3d[..., i:i+1, j:j+1, 26:30]

            # Best Box coordinate
            best_box = (
                (1 - best_confidence_idx) * boxes1
                + best_confidence_idx * boxes2
            )

            converted_boxes[..., i:i+1, j:j+1, :] = torch.cat(
                (
                    best_class.float(), # match the dtype of the other parts
                    best_confidence,
                    best_box
                ),
                dim=-1
            )

    return converted_boxes
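The rescaling inside convert_cellboxes is the inverse of the cell-relative conversion done in dataset.py. For a single box it reduces to the arithmetic below; `to_image_relative` is a hypothetical helper written only for this illustration.

```python
def to_image_relative(row, col, cell_x, cell_y, cell_w, cell_h, S=7):
    """Convert a cell-relative box in cell (row, col) back to image-relative form."""
    x = (col + cell_x) / S   # column index plus offset, scaled back to [0, 1]
    y = (row + cell_y) / S   # row index plus offset, scaled back to [0, 1]
    w = cell_w / S
    h = cell_h / S
    return x, y, w, h

# Row 1, column 3 with offsets (0.5, 0.75) maps back to a centre of (0.5, 0.25)
print(to_image_relative(1, 3, 0.5, 0.75, 1.4, 2.8))
```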

# input: (128, 7, 7, 30)
# return: [[[class,confidence,x,y,w,h],[],[]],[],...,[]], each element -> all
# boxes per image: 3D
def cellboxes_to_list_boxes(box_3d, S=7):
    '''
    Returns a list of "list of all bounding box information" in the format of
    (class, confidence, x, y, w, h). The length of the output will be the same
    as batch_size. Each ELEMENT of output is also a LIST for a particular IMAGE.

    Parameters:
        box_3d (tensor): Shape of (batch_size, S, S, 30). Each element in dim=0
            is a 3D-shaped bounding boxes.
        S (int): Grid size
    Returns:
        list: The length of output will be the same as batch_size.
            Each ELEMENT of output is also a 2D LIST for a particular IMAGE.
            Each bounding box is (class, confidence, x, y, w, h)
    '''
    converted_list_boxes = []
    batch_size = box_3d.shape[0]

    # convert (batch_size,S,S,30) -> (batch_size,S,S,6)
    box_3d = convert_cellboxes(box_3d, S)
    print("box_3d in cellboxes_to_list_boxes:",box_3d.shape)
    box_3d = box_3d.reshape(batch_size, S*S, -1)
    # The tensor stays float, so the class index comes out as e.g. 11.0
    box_3d[..., 0] = box_3d[..., 0].long()

    for img_idx in range(batch_size):
        img_list_boxes = []
        for box_idx in range(S*S):
            img_list_boxes.append([x.item() for x in box_3d[img_idx, box_idx, :]])
        converted_list_boxes.append(img_list_boxes)

    return converted_list_boxes

def save_checkpoint(state, fname="my_checkpoint.pth.tar"):
    print("=> Saving Checkpoint...")
    torch.save(state, fname)

def load_checkpoint(checkpoint, model, optimizer):
    print("=> Loading Checkpoint...")
    model.load_state_dict(checkpoint['model_state_dict'])
    optimizer.load_state_dict(checkpoint['optimizer_state_dict'])

train.py


import torch
import torchvision.transforms as transforms
import torch.optim as optim
import torchvision.transforms.functional as F
from tqdm import tqdm
from torch.utils.data import DataLoader
from model import YOLOv1
from dataset import VOCDataset
from utils import (
    intersection_over_union,
    non_max_suppression,
    mean_average_precision,
    cellboxes_to_list_boxes,
    get_bboxes,
    save_checkpoint,
    load_checkpoint,
)
from loss import YoloLoss

seed = 123
torch.manual_seed(seed)

# Hyperparameters
LEARNING_RATE = 5e-6
DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'
print(f"Running on {DEVICE}")
BATCH_SIZE = 32
WEIGHT_DECAY = 0
EPOCHS = 3000
NUM_WORKERS = 2
PIN_MEMORY = True
LOAD_MODEL = True # set to False on the first run, when no checkpoint exists yet
LOAD_MODEL_FILE = "overfit.pth.tar"
IMG_DIR = "data/images"
LABEL_DIR = "data/labels"

max_map = 0
save_map = False

class Compose(object):
    def __init__(self, transforms):
        self.transforms = transforms

    def __call__(self, img, bboxes):
        # Only the image is transformed; the boxes are passed through unchanged
        for t in self.transforms:
            img, bboxes = t(img), bboxes
        return img, bboxes

transform = Compose([transforms.Resize((448,448)), transforms.ToTensor()])

def train_fn(train_loader, model, optimizer, loss_fn):
    loop = tqdm(train_loader, leave=True)
    mean_loss = []

    for batch_idx, (x, y) in enumerate(loop):
        x, y = x.to(DEVICE), y.to(DEVICE)
        out = model(x)
        loss = loss_fn(out, y)
        mean_loss.append(loss.item())
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Update the progress bar
        loop.set_postfix(loss = loss.item())

    print(f"Mean loss was {sum(mean_loss)/len(mean_loss)}")

def main():
    global max_map
    global save_map

    model = YOLOv1(grid_size=7, num_boxes=2, num_classes=20).to(DEVICE)
    optimizer = optim.Adam(
        model.parameters(), lr=LEARNING_RATE, weight_decay=WEIGHT_DECAY
    )
    loss_fn = YoloLoss()

    if LOAD_MODEL:
        load_checkpoint(torch.load(LOAD_MODEL_FILE, map_location=DEVICE), model, optimizer)

    train_dataset = VOCDataset(
        "data/8examples.csv",
        transform=transform,
        img_dir=IMG_DIR,
        label_dir=LABEL_DIR,
    )
    test_dataset = VOCDataset(
        "data/test.csv",
        transform=transform,
        img_dir=IMG_DIR,
        label_dir=LABEL_DIR
    )
    train_loader = DataLoader(
        dataset=train_dataset,
        batch_size=BATCH_SIZE,
        shuffle=False,
        drop_last=False
    )
    test_loader = DataLoader(
        dataset=test_dataset,
        batch_size=BATCH_SIZE,
        shuffle=True,
        drop_last=True
    )

    for epoch in range(EPOCHS):
        # Evaluate mAP on the training set before each training epoch
        pred_boxes, target_boxes = get_bboxes(
            train_loader, model, iou_threshold=0.5, confidence_threshold=0.4, device=DEVICE
        )
        mean_avg_prec = mean_average_precision(
            pred_boxes, target_boxes, iou_threshold=0.5, box_format="midpoint"
        )
        print(f"Train mAP: {mean_avg_prec}")

        # Save a checkpoint once, the first time mAP exceeds 0.99
        if mean_avg_prec > 0.99 and not save_map:
            checkpoint = {
                "model_state_dict": model.state_dict(),
                "optimizer_state_dict": optimizer.state_dict(),
            }
            save_checkpoint(checkpoint, fname=LOAD_MODEL_FILE)
            max_map = mean_avg_prec
            save_map = True

        train_fn(train_loader, model, optimizer, loss_fn)

if __name__ == "__main__":
    main()

plot_image.py

Now let’s test our model to see if it works.


import torch
from dataset import VOCDataset
import matplotlib.pyplot as plt
import matplotlib.patches as patches
import numpy as np
from model import YOLOv1
from utils import (
    cellboxes_to_list_boxes,
    non_max_suppression,
)
import time

LOAD_MODEL_FILE = "overfit.pth.tar"
IMG_DIR = "data/images"
LABEL_DIR = "data/labels"
DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'

# Names for the classes that appear in the examples
classes={
    1: 'bicycle',
    6: 'car',
    7: 'cat',
    11: 'dog',
    12: 'horse',
    14: 'person'
}

color_list = ['red','blue','brown','rosybrown','lightyellow','aquamarine','mediumslateblue','skyblue','darkorchid','purple','cyan','darkcyan','lime','green','lightsteelblue','cornflowerblue','pink','crimson','peru','chocolate']
class_list = list(range(0,20))
colors = dict(zip(class_list,color_list))

def plot_bbox(image_tensor):
    '''
    Draw bounding boxes with image

    Parameters:
        image_tensor (tensor): (3, 448, 448)
    Returns
        None
    '''
    start_time = time.time()

    model = YOLOv1(grid_size=7, num_boxes=2, num_classes=20)
    model.load_state_dict(
        torch.load(LOAD_MODEL_FILE, map_location=DEVICE)['model_state_dict']
    )

    # DON'T FORGET!!! training mode might mess up Dropout and BatchNorm
    model.eval()
    # Inference only, so no gradients are needed
    with torch.no_grad():
        predictions = model(image_tensor.unsqueeze(0))

    # 1) Loop through images and predictions
    # 2) Plot image first then the prediction
    pred_boxes = cellboxes_to_list_boxes(predictions)
    pred_boxes_nms = []

    for idx in range(len(pred_boxes)):
        nms_boxes = non_max_suppression(
            pred_boxes[idx],
            iou_threshold=0.5,
            confidence_threshold=0.5,
            box_format="midpoint"
        )
        pred_boxes_nms.append(nms_boxes)

    image = image_tensor.permute(1, 2, 0)
    bboxes = pred_boxes_nms[0]

    fig, ax = plt.subplots(figsize=(10, 7))

    # Convert to np.array to obtain height and width
    image = np.array(image)
    img_h, img_w, _ = image.shape
    ax.imshow(image)

    for box in bboxes:
        box_class, confidence, x, y, w, h = box
        box_class = int(box_class) # the class index arrives as a float

        # Convert the midpoint to the upper-left corner, in pixels
        upper_left_x = x - w / 2
        upper_left_y = y - h / 2
        upper_left_x *= img_w
        upper_left_y *= img_h

        rect = patches.Rectangle(
            (upper_left_x, upper_left_y),
            width = w * img_w,
            height = h * img_h,
            linewidth=3,
            edgecolor=colors[box_class],
            facecolor='none'
        )
        ax.add_patch(rect)
        ax.text(
            x = upper_left_x,
            y = upper_left_y - 5,
            fontsize=8,
            backgroundcolor = colors[box_class],
            color = 'black',
            # fall back to the class index for classes without a name above
            s = f"{classes.get(box_class, str(box_class))}: {confidence:.2f}"
        )

    ax.set_axis_off()
    print(f"Execution Time: {time.time() - start_time} seconds.")

    # plt.show(block=False)
    plt.axis('off')
    plt.draw()
    plt.pause(5)
    plt.close()
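The only geometry in plot_bbox is the conversion from a normalized midpoint box to the pixel rectangle that matplotlib expects (upper-left corner plus width and height). A minimal sketch of that step on its own, with the hypothetical helper name `to_pixel_rect` and made-up box values:

```python
def to_pixel_rect(x, y, w, h, img_w, img_h):
    """Convert a normalized (x, y, w, h) midpoint box to a pixel rectangle.

    Returns (left, top, width, height) in pixels.
    """
    left = (x - w / 2) * img_w   # shift from the centre to the left edge
    top = (y - h / 2) * img_h    # shift from the centre to the top edge
    return left, top, w * img_w, h * img_h

print(to_pixel_rect(0.5, 0.5, 0.25, 0.5, img_w=448, img_h=448))
# (168.0, 112.0, 112.0, 224.0)
```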